Sometimes, a photo doesn't tell the whole story—not because of what's captured, but because of how clearly it's seen. Blurry, low-resolution images are common, especially when dealing with old files, digital compression, or limited hardware. While traditional upscaling tools can stretch images, they often fall short of restoring real detail. Super-Resolution Generative Adversarial Networks, or SRGANs, take a smarter approach.

Rather than estimating missing pixels with fixed formulas, they learn patterns from high-quality images and use them to rebuild missing detail in a way that looks natural. SRGANs aren’t just sharpening photos; they’re changing how we bring clarity back to images that once seemed beyond repair.

How SRGANs Work and What Makes Them Different

Standard upscaling methods, such as bilinear or bicubic interpolation, estimate each missing pixel as a weighted average of its neighbors using a fixed mathematical formula. Averaging cannot create detail that isn't already present, so the results often look soft and fuzzy. SRGANs go further by generating high-resolution images from patterns learned during training, producing visuals that look sharper and more convincing.
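The following minimal pure-Python sketch makes the contrast concrete by applying bilinear interpolation to a tiny grayscale image stored as a list of rows. The function name `bilinear_upscale` and the half-pixel coordinate mapping are illustrative choices, not taken from any particular library. Each output pixel is a weighted average of its nearest input pixels, so a hard edge turns into a gradual ramp instead of gaining new detail.

```python
def bilinear_upscale(img, scale):
    """Upscale a 2D grayscale image (list of rows) by an integer factor."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * scale):
        # Map the output pixel centre back to a clamped input coordinate.
        src_y = min(max((y + 0.5) / scale - 0.5, 0.0), float(h - 1))
        y0 = int(src_y)
        y1 = min(y0 + 1, h - 1)
        fy = src_y - y0  # vertical interpolation weight
        row = []
        for x in range(w * scale):
            src_x = min(max((x + 0.5) / scale - 0.5, 0.0), float(w - 1))
            x0 = int(src_x)
            x1 = min(x0 + 1, w - 1)
            fx = src_x - x0  # horizontal interpolation weight
            # Blend horizontally on the two neighbouring rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A single hard edge from black (0) to white (255), upscaled 2x.
upscaled = bilinear_upscale([[0, 255]], 2)
```

Each row of `upscaled` is `[0.0, 63.75, 191.25, 255.0]`. The sharp jump is spread across intermediate values, which produces the softness described above.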

SRGANs consist of two main parts: a generator and a discriminator. The generator tries to create a high-resolution version of a low-resolution input. The discriminator is shown both real high-resolution images and the generator's outputs, and it tries to tell which are real and which are fake. Through this continuous feedback between the two networks, the generator improves over time and learns to produce images that can fool the discriminator.
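The tug-of-war between the two networks is usually expressed as a pair of loss functions. This sketch assumes the discriminator's outputs are already available as probabilities. Here they are plain floats standing in for a real network's predictions. The code computes binary cross-entropy for the discriminator and the `-log D(G(x))` form of the generator's adversarial loss used in the original SRGAN paper. The function names are hypothetical.

```python
import math

EPS = 1e-12  # keeps log() away from zero


def bce(prob, target):
    """Binary cross-entropy for a single predicted probability."""
    prob = min(max(prob, EPS), 1 - EPS)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))


def discriminator_loss(d_real, d_fake):
    """D should score real high-res images near 1 and generated images near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_adversarial_loss(d_fake):
    """G is rewarded when D scores its outputs as real: mean of -log D(G(x))."""
    return sum(-math.log(max(p, EPS)) for p in d_fake) / len(d_fake)
```

During training, the two losses are minimized in alternating steps. One step updates the discriminator with `discriminator_loss`. The next step updates the generator with its adversarial term, combined with the feature-based loss discussed below.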

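What makes SRGANs effective is their use of a perceptual loss. Rather than optimizing only pixel-level accuracy, such as the mean squared error between pixels, it combines two signals. The first is a content loss that compares the deeper feature maps of the generated and real images, computed by a pre-trained network like VGG19. The second is the adversarial signal from the discriminator. Together these let SRGANs produce results that may not be mathematically identical to the original but look more realistic to the human eye.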
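Here is a minimal sketch of how these pieces combine, following the SRGAN formulation of a feature-space content loss plus an adversarial term weighted by 0.001. Running VGG19 is out of scope for a self-contained example, so `toy_features` is a hypothetical stand-in that returns horizontal pixel differences. A real implementation would compare activations from a pre-trained VGG19 layer instead. `d_fake` is assumed to be the discriminator's probability that the generated image is real.

```python
import math


def mse(a, b):
    """Mean squared error between two equally sized 2D grids."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def toy_features(img):
    """Hypothetical stand-in for VGG19 feature maps: horizontal edge responses."""
    return [[row[i + 1] - row[i] for i in range(len(row) - 1)] for row in img]


def perceptual_loss(sr, hr, d_fake, adv_weight=1e-3):
    """Content loss in feature space plus a down-weighted adversarial term."""
    content = mse(toy_features(sr), toy_features(hr))
    adversarial = -math.log(max(d_fake, 1e-12))
    return content + adv_weight * adversarial


hr = [[0, 10, 20]]
sr = [[5, 15, 25]]  # uniformly brighter, but with identical edges
```

For these inputs, the pixel-wise `mse(sr, hr)` is 25.0. The content term, however, is 0 because the toy features (the edges) match exactly. A real VGG-based loss is far richer, but the principle is the same: images are compared by their features rather than pixel by pixel.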

This method helps SRGANs add texture and clarity to images in a way that older algorithms can’t. The generator isn't just filling in missing pixels—it’s generating believable details based on patterns learned during training.

Real-World Applications and Use Cases

One of the most visible uses of SRGANs is in photography. Low-resolution images from older cameras or compressed files can be enhanced to meet modern display needs. Graphic designers and publishers often rely on such tools to make images print-ready without requiring new photoshoots.

In security, surveillance systems often record footage at low resolution to conserve bandwidth. When clarity becomes critical, SRGANs can enhance faces or objects in frames and make them easier to inspect. The added detail is inferred from training data rather than recovered from the footage. For that reason, these enhancements can improve visibility but should not be treated as proof of what the camera actually captured.

In healthcare, SRGANs have been explored in medical imaging. CT scans or MRIs taken at lower resolutions can be refined for clearer interpretation. Although this area needs careful validation, the potential to improve image quality without additional scans is appealing.

Online retail also benefits from SRGANs. Sellers can upscale product images without rephotographing items, maintaining sharpness even at larger sizes. This supports better presentation across websites, apps, and promotional materials.

Old films and TV shows are another area where SRGANs are making a mark. Restoration projects use them to upgrade footage to HD or 4K, reducing manual frame-by-frame work. These enhancements preserve the original feel while updating the visual quality for modern screens.

Benefits, Limitations, and the Balance Between Realism and Accuracy

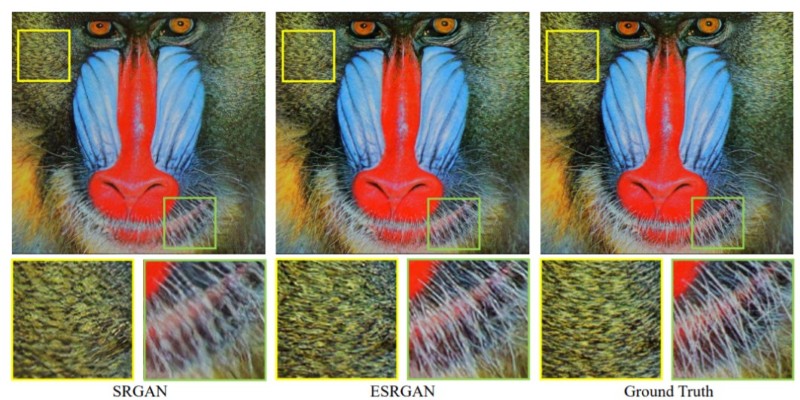

The strongest benefit of SRGANs lies in how real their results appear. By recreating fine textures and sharp edges, they provide images that feel authentic. Compared to interpolation, the improvement in visual quality is significant.

However, SRGANs aren’t perfect. They guess missing details based on training data, which means they might introduce elements not present in the original. This is less of a concern in general use but becomes an issue in settings like forensics or scientific analysis, where accuracy matters more than appearance.

Training SRGANs also requires significant computing power and large datasets. Although pre-trained models are available, they may not perform well across every type of image. Differences in lighting, texture, or subject matter can affect results.
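Part of that dataset cost is preparing matched pairs. A common approach, and the one used for the original SRGAN, is to start from high-resolution images and downsample them to create the low-resolution inputs. The sketch below assumes grayscale images stored as lists of rows. For simplicity, it uses box (block-average) downsampling in place of the bicubic kernel SRGAN actually used. `box_downsample` and `make_training_pairs` are illustrative names.

```python
def box_downsample(hr, factor):
    """Average each factor x factor block of a 2D grayscale image into one pixel."""
    h, w = len(hr), len(hr[0])
    if h % factor or w % factor:
        raise ValueError("image height and width must be divisible by factor")
    lr = []
    for y in range(0, h, factor):
        row = []
        for x in range(0, w, factor):
            block = [hr[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        lr.append(row)
    return lr


def make_training_pairs(hr_images, factor=4):
    """Return (low-res input, high-res target) pairs; SRGAN used a 4x factor."""
    return [(box_downsample(hr, factor), hr) for hr in hr_images]
```

A model trained only on such cleanly downsampled pairs never sees noise or compression artifacts. This is one reason pre-trained models can struggle on images whose degradations differ from their training data.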

Another issue is reliability. Some generated details may appear too artificial or inconsistent. In these cases, SRGANs may require fine-tuning or additional processing to avoid misleading visuals. Developers must weigh realism against fidelity, depending on the specific application.
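Peak signal-to-noise ratio (PSNR), a standard fidelity metric computed from the pixel-wise mean squared error, shows one side of the realism-versus-fidelity tension. The following plain-Python sketch computes PSNR for 8-bit values; the `max_value=255.0` default is an assumption about the pixel range. In the toy case, the reference alternates between 10 and 20. A flat guess of 15 scores higher than a guess that reproduces the alternating texture shifted by one pixel, even though only the latter reproduces the texture at all. Similarly, the original SRGAN work reported lower PSNR for its GAN-trained model than for its MSE-trained counterpart, while human raters preferred the GAN results.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2D images."""
    ref = [v for row in reference for v in row]
    est = [v for row in estimate for v in row]
    mse = sum((r - e) ** 2 for r, e in zip(ref, est)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


reference = [[10, 20, 10, 20]]  # fine alternating texture
flat = [[15, 15, 15, 15]]       # blurry "average" guess: MSE 25
shifted = [[20, 10, 20, 10]]    # right texture, wrong phase: MSE 100
```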

Despite these challenges, SRGANs offer a strong improvement over traditional methods. For many use cases—especially where the final image is meant for visual appeal rather than technical precision—their benefits outweigh the trade-offs.

Future Outlook and How SRGANs Are Evolving

SRGANs have inspired newer models such as ESRGAN (Enhanced Super-Resolution GAN) and Real-ESRGAN. ESRGAN refines the original architecture and training objective to reduce visual artifacts and produce more natural textures. Real-ESRGAN is trained on synthetically degraded images, so it works better on real-world inputs with noise, blur, or compression artifacts.
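ESRGAN changed the training objective in one notable way: it replaced the standard discriminator with a relativistic average discriminator. Instead of judging each image in isolation, this discriminator estimates whether a real image is more realistic than the generated ones on average. The sketch below computes both losses from raw discriminator scores (logits) given as plain floats. `ra_losses` is a hypothetical name, and a real implementation would operate on batched tensors.

```python
import math

EPS = 1e-12  # keeps log() finite


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mean(values):
    return sum(values) / len(values)


def ra_losses(c_real, c_fake):
    """Relativistic average GAN losses from raw discriminator scores C(x)."""
    mean_real, mean_fake = mean(c_real), mean(c_fake)
    # D_Ra(x_r, x_f): how much more realistic each real image is than the average fake.
    d_real = [sigmoid(c - mean_fake) for c in c_real]
    # D_Ra(x_f, x_r): how much more realistic each fake image is than the average real.
    d_fake = [sigmoid(c - mean_real) for c in c_fake]
    loss_d = (-mean([math.log(d + EPS) for d in d_real])
              - mean([math.log(1 - d + EPS) for d in d_fake]))
    loss_g = (-mean([math.log(1 - d + EPS) for d in d_real])
              - mean([math.log(d + EPS) for d in d_fake]))
    return loss_d, loss_g
```

When real and generated images receive identical scores, both losses equal 2·ln 2 (about 1.386). As real scores pull ahead of fake ones, the discriminator loss falls toward zero and the generator loss rises. Unlike the standard setup, the generator's loss here also depends on the real images' scores, so it receives gradients from both real and generated data.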

There is growing interest in using SRGANs for real-time processing, particularly in video streaming and mobile applications. Faster, lighter models are being developed to deliver super-resolution without slowing down devices. This would make image enhancement accessible in more settings, from live video calls to augmented reality.

Self-supervised learning is another promising direction. Instead of relying on carefully matched low- and high-resolution image pairs, these models learn from unpaired data or from the structure of the input images themselves. This reduces the effort needed to build datasets and broadens the technology’s reach.

Ethical concerns are also being considered. As AI-generated images become harder to distinguish from originals, there’s an increasing need for transparency. Methods like watermarking or metadata tagging are being explored to identify generated content and prevent misuse.
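At its simplest, metadata tagging means storing a record alongside the output that identifies the file and states that it was AI-enhanced. The sketch below is hypothetical: the field names and the choice of a SHA-256 content hash are illustrative assumptions, not a standard schema. Real provenance standards such as C2PA define signed manifests embedded in the file. Watermarking takes a different approach and hides a signal in the pixels themselves.

```python
import hashlib
import json


def provenance_record(image_bytes, model_name, scale):
    """Build a hypothetical sidecar record describing an AI-enhanced image."""
    return {
        "sha256": hashlib.sha256(image_bytes).hexdigest(),  # fingerprint of the exact output bytes
        "generator": model_name,
        "upscale_factor": scale,
        "ai_enhanced": True,
    }


def matches_record(image_bytes, record):
    """True if the bytes are exactly the ones the record was created for."""
    return hashlib.sha256(image_bytes).hexdigest() == record["sha256"]


# Serialize the record as JSON, e.g. to store next to the image file.
record = provenance_record(b"example image bytes", "example-sr-model", 4)
sidecar_json = json.dumps(record, indent=2)
```

The hash changes if even one byte changes, so `matches_record` can detect when a file no longer corresponds to its record. However, an unsigned record like this can simply be stripped or rewritten, which is why production systems rely on cryptographic signatures.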

SRGANs are no longer just experimental tools. They’re being integrated into everyday applications and workflows. As they improve, the range of tasks they can handle—while maintaining realism and control—continues to expand.

Conclusion

SRGANs have reshaped how we handle image quality problems. Instead of relying on basic upscaling, they utilize learned data patterns to produce detailed, sharp results that appear natural. Their ability to bridge the gap between low-res and high-res images has made them useful across fields from digital media and security to healthcare and retail. While they can't guarantee exact reproduction and come with some risks, especially in high-accuracy settings, their impact is clear. As newer models improve performance and reduce limitations, SRGANs are becoming part of standard image enhancement tools, bringing old or low-quality visuals back to life with surprising clarity.