Let’s be honest: AI papers aren’t always the easiest thing to read. Some make your eyes glaze over before you even hit the abstract. But every once in a while, a few of them really hit the mark. This year at ICLR 2024, a handful of papers stood out—not because they used fancier words or showed off equations, but because they brought something fresh to the table. Whether it was a surprising trick that boosted model performance or a new way to think about fairness, these papers gave us something to talk about. So here they are: the ones that made noise for the right reasons.

Best Research Papers on AI: ICLR 2024 Outstanding Paper Awards

Mixtral of Experts: Efficient Sparse Mixture-of-Experts for Language Models

This one caught everyone’s attention for good reason. Mixtral ditches the idea that every part of a model has to work all the time. Each layer holds eight expert blocks, and for every token a small router picks just two of them to run. It’s like calling in only the two best experts for a particular question instead of the whole panel. As a result, each token touches roughly 13B of the model’s roughly 47B parameters, which cuts the compute per token without trimming off the model’s skills. Pretty clever if you're tired of models that feel overbuilt.
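To make the "two experts per question" idea concrete, here is a minimal sketch of top-2 routing for a single token in Python with NumPy. It assumes toy sizes, random weights, and experts that are plain linear maps. In Mixtral the experts are feed-forward blocks inside every transformer layer and routing runs over batches of tokens. The function and variable names are hypothetical, not taken from Mixtral's code.

```python
import numpy as np

def top2_moe_layer(x, router_w, experts, k=2):
    """Sparse mixture-of-experts forward pass for one token vector x.

    router_w: (d_model, n_experts) router weights
    experts:  list of callables, each mapping a (d_model,) vector to (d_model,)
    """
    logits = x @ router_w                   # one routing score per expert
    top = np.argsort(logits)[-k:]           # indices of the k highest scores
    gate = np.exp(logits[top] - logits[top].max())
    gate /= gate.sum()                      # softmax over the selected experts only
    # Only the k chosen experts are evaluated; the rest are skipped entirely.
    out = sum(g * experts[i](x) for g, i in zip(gate, top))
    return out, top

# Toy usage: 8 experts, each a random linear map (hypothetical stand-ins).
rng = np.random.default_rng(0)
d_model, n_experts = 16, 8
router_w = rng.normal(size=(d_model, n_experts))
expert_mats = [rng.normal(size=(d_model, d_model)) for _ in range(n_experts)]
experts = [lambda v, m=m: v @ m for m in expert_mats]
y, chosen = top2_moe_layer(rng.normal(size=d_model), router_w, experts)
```

The design choice worth noticing is that the softmax runs only over the selected experts' scores, so the two gate weights always sum to 1 and the six unselected experts cost nothing for that token.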

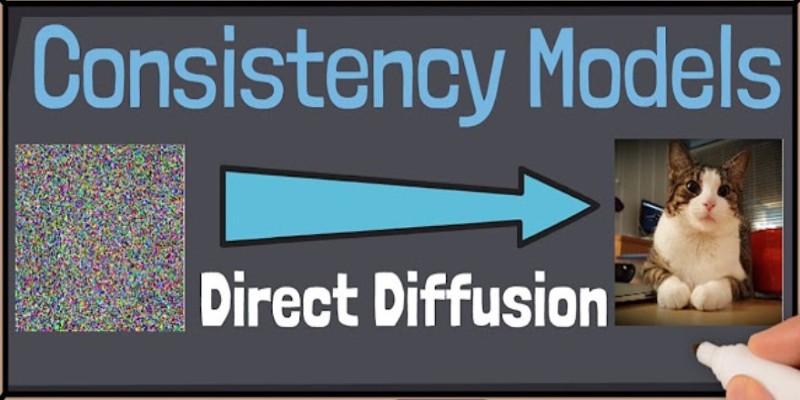

Consistency Models: Sampling Fast and Sharp

Let’s face it: diffusion models have been a bit slow. They're good at generating images, but they get there through many small denoising steps, and the wait isn't fun. That's where Consistency Models come in. This paper trains a model to map a noisy input straight back to a clean sample, so generation can take a single network evaluation (or a few, if you want extra quality) instead of walking through every step. It's fast, and the samples still come out sharp rather than smudged or blurry. If you've ever used AI art tools and wanted them to just hurry up already, this paper basically had the same thought and did something about it.
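A rough sketch of how one-step (and optional few-step) sampling works, in Python with NumPy. It follows the skip/output parameterization described in the Consistency Models paper, which guarantees the model returns its input unchanged at the smallest noise level. The constants SIGMA_DATA, EPS, and T are assumed values in the style of that paper, and `dummy_F` is a hypothetical placeholder for a trained network, so the output here is noise-shaped rather than a real image.

```python
import numpy as np

SIGMA_DATA, EPS, T = 0.5, 0.002, 80.0   # assumed constants

def c_skip(t):
    return SIGMA_DATA**2 / ((t - EPS) ** 2 + SIGMA_DATA**2)

def c_out(t):
    return SIGMA_DATA * (t - EPS) / np.sqrt(SIGMA_DATA**2 + t**2)

def consistency_fn(F, x, t):
    # At t == EPS, c_skip is 1 and c_out is 0, so f(x, EPS) == x by construction.
    return c_skip(t) * x + c_out(t) * F(x, t)

def sample(F, shape, taus=(), rng=None):
    if rng is None:
        rng = np.random.default_rng()
    # One step: start from pure noise at the largest time T and jump straight to a sample.
    x = consistency_fn(F, T * rng.normal(size=shape), T)
    # Optional extra steps: re-noise to a smaller time tau, then jump back again.
    for tau in taus:
        z = rng.normal(size=shape)
        x = consistency_fn(F, x + np.sqrt(tau**2 - EPS**2) * z, tau)
    return x

dummy_F = lambda x, t: np.tanh(x)   # hypothetical stand-in for a trained network
img = sample(dummy_F, (8, 8), taus=(2.0,), rng=np.random.default_rng(0))
```

With `taus=()` the whole generation is a single call to the network; each extra entry in `taus` buys a little more quality for one more call.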

Evo: Scaling Visual Imitation with Life-Long Memory

If you’ve ever seen a robot struggle to learn the same thing over and over, this one’s for you. Evo introduced a way to let agents "remember" visual tasks across episodes without getting confused when things change. It's not just about learning faster but about learning better. They trained agents to pick up new tasks by drawing on old ones, sort of like how we remember brushing our teeth even if the bathroom changes. And weirdly enough, the longer it trained, the more stable it got, which is rare in AI, where training on new tasks often erodes old skills.

Self-Rewarding Language Models

Here’s a weird question: what if language models could reward themselves? No, not in a sci-fi way. This paper showed that large language models can come up with their own reward signals to improve their answers without needing a human thumbs-up every time. The model writes several candidate answers, then acts as its own judge and scores them; the best- and worst-scored answers become a preference pair for the next round of training. It’s a bit like grading your own homework and actually getting better at it. Repeating this loop a few times improved the model on instruction-following tasks, and its judging got better along the way. Less hand-holding, more autonomy. Feels like a step toward models that learn on their own terms.
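The self-judging step can be sketched as a function that turns one prompt into a preference pair. This is a simplified illustration that assumes hypothetical `generate` and `judge` callables; in the paper the same model plays both roles and the judge prompt asks for a score out of 5, while the toy judge below just rewards longer answers. The resulting pairs would then feed a preference-optimization step (the paper uses DPO), which is not shown.

```python
from itertools import cycle

def build_preference_pair(prompt, generate, judge, n=4):
    """Sample n answers, score them with the same model, keep best vs. worst."""
    candidates = [generate(prompt) for _ in range(n)]
    scored = [(judge(prompt, c), c) for c in candidates]
    best_score, best = max(scored, key=lambda s: s[0])
    worst_score, worst = min(scored, key=lambda s: s[0])
    if best_score == worst_score:
        return None  # the judge could not tell the answers apart: no training signal
    return {"prompt": prompt, "chosen": best, "rejected": worst}

# Toy usage with hypothetical stand-ins for the model.
answers = cycle(["ok", "a longer answer", "a much more detailed answer", "hm"])
toy_generate = lambda prompt: next(answers)
toy_judge = lambda prompt, resp: min(5, len(resp) // 5)  # fake 0-5 score
pair = build_preference_pair("Explain MoE.", toy_generate, toy_judge)
```

Dropping ties is a deliberate choice: a pair where the judge sees no difference would teach the model nothing.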

ZipLoRA: Efficient Fine-Tuning for Low-Rank Adaptation

Fine-tuning giant models usually comes with a trade-off between time and money. ZipLoRA found a way to ease that. Low-Rank Adaptation (LoRA) already freezes the original weights and trains only a pair of small low-rank matrices per layer; ZipLoRA builds on that idea and compresses it further without losing precision. What makes this different is the way it handles memory and storage: it’s compact, and the performance holds steady. Ideal for anyone who wants to fine-tune models but doesn’t want to rent a server farm to do it.
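Since ZipLoRA builds on LoRA, it helps to see what a plain LoRA layer looks like. The sketch below is standard LoRA in NumPy, not ZipLoRA's own technique: the pretrained weight `W` stays frozen, and only the low-rank factors `A` and `B` would be trained. The class name, rank, and scaling values are illustrative assumptions.

```python
import numpy as np

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update scale * (B @ A)."""

    def __init__(self, W, r=4, alpha=8, rng=None):
        if rng is None:
            rng = np.random.default_rng()
        d_out, d_in = W.shape
        self.W = W                                     # frozen pretrained weight
        self.A = rng.normal(scale=0.01, size=(r, d_in))
        self.B = np.zeros((d_out, r))                  # zero init: starts identical to W
        self.scale = alpha / r

    def __call__(self, x):
        # Base output plus the low-rank correction, without ever forming B @ A.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self):
        return self.A.size + self.B.size

    def merged_weight(self):
        # Fold the update into one matrix for inference.
        return self.W + self.scale * self.B @ self.A

rng = np.random.default_rng(0)
W = rng.normal(size=(512, 512))
layer = LoRALinear(W, r=4, alpha=8, rng=rng)
```

For a 512×512 layer with rank 4, that is 4,096 trainable numbers instead of 262,144, and `merged_weight()` folds the update back into a single matrix so inference costs nothing extra.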

Aligning AI with Values Through Recursive Critique

This one took a different route: more on the philosophical side, but not in a fuzzy way. The paper suggested that instead of training AI with hardcoded "good" answers, you let it reflect on its outputs through recursive critique. Basically, the model reviews its own responses, points out what's wrong, and revises them, round after round. Think of it like having a conversation with yourself to double-check your decisions. Surprisingly, this helped it land more human-aligned answers without needing a checklist of moral dos and don'ts.
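Here is a generic critique-and-revise loop in Python, as a sketch of the idea rather than the paper's method. The `critique` and `revise` callables are hypothetical stand-ins for prompts to the same model. The loop stops when the critic has nothing left to flag or after a fixed number of rounds; that cap is an assumption added to keep it from running forever.

```python
def critique_and_revise(prompt, draft, critique, revise, max_rounds=3):
    """Repeatedly ask for feedback on a draft and revise it until none is left."""
    history = [draft]
    for _ in range(max_rounds):
        feedback = critique(prompt, draft)
        if feedback is None:  # the critic found nothing left to fix
            break
        draft = revise(prompt, draft, feedback)
        history.append(draft)
    return draft, history

# Toy stand-ins: the critic only asks for a caveat, the reviser adds one.
toy_critique = lambda prompt, text: None if "caveat" in text else "mention a caveat"
toy_revise = lambda prompt, text, feedback: text + " (with a caveat)"
final, history = critique_and_revise("Is this safe?", "Yes.", toy_critique, toy_revise)
```

Keeping the full `history` makes it easy to inspect how each round of self-critique changed the answer.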

Open Vocab 3D Object Detection with Multimodal Models

Here's a cool one if you're into computer vision. Most 3D object detectors struggle when asked to identify anything outside their training set. This paper introduced a method that uses both image and text data to fix that. You point a model at a room, and instead of saying "unknown object," it guesses "coffee mug" or "router" by matching what it sees against text descriptions of the categories. Adding a new category just means adding a new text label, with no retraining needed. This kind of flexibility makes it feel closer to how we naturally look at the world and label what we see.
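The matching step behind open-vocabulary labeling can be sketched as cosine similarity between region features and text embeddings. This NumPy sketch assumes the hard parts are already done: some hypothetical encoder has produced one embedding per detected 3D region and one per label string, in a shared space. The toy vectors below are made up purely for illustration.

```python
import numpy as np

def label_regions(region_embs, text_embs, labels):
    """Give each region the label whose text embedding is most similar (cosine)."""
    r = region_embs / np.linalg.norm(region_embs, axis=1, keepdims=True)
    t = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    sims = r @ t.T                      # shape: (n_regions, n_labels)
    best = sims.argmax(axis=1)
    return [(labels[j], float(sims[i, j])) for i, j in enumerate(best)]

labels = ["coffee mug", "router", "chair"]
text_embs = np.eye(3)  # hypothetical stand-in for a text encoder's outputs
region_embs = np.array([[0.9, 0.1, 0.0], [0.1, 0.2, 0.95]])
results = label_regions(region_embs, text_embs, labels)
```

Because the label list is just data, swapping in new category names changes what the detector can name without touching any trained weights.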

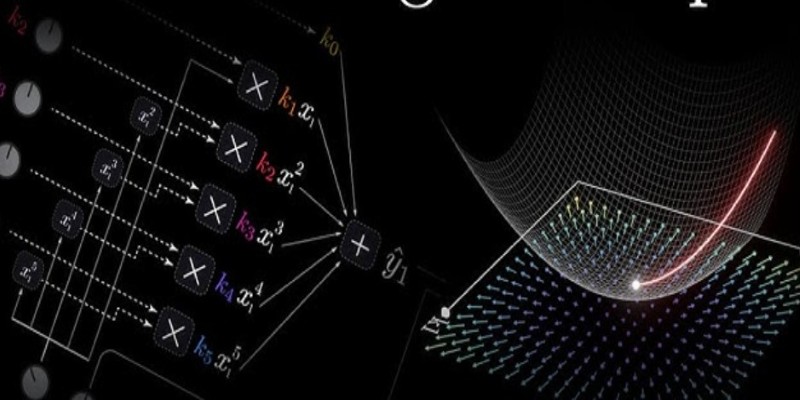

GaLore: Memory-Efficient Backpropagation

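Backpropagation has always had one big flaw: it eats up memory. GaLore (Gradient Low-Rank Projection) goes after a big part of that bill, the optimizer state. It projects each weight matrix's gradient into a small low-rank subspace, keeps the optimizer's running statistics (such as Adam's moments) in that compact space, and projects the update back to full size, making backprop feel less like a RAM guzzler. Because the subspace is refreshed periodically during training, the weights can still learn full-rank updates over time, so you keep accurate updates without the usual memory overload. What stands out is how cleanly it fits into existing pipelines: it works at the optimizer level, so you don't need to reinvent your entire system to use it. For folks working on limited hardware, this one's a relief.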
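A minimal sketch of the GaLore idea applied to Adam, in NumPy, for a single weight matrix. It assumes the projector comes from the top singular vectors of the current gradient and is refreshed every `update_proj_every` steps (a hypothetical name for the paper's subspace-update frequency). It also omits GaLore's extra scale factor and any special handling of the moments when the subspace switches, so treat it as an illustration rather than a drop-in optimizer.

```python
import numpy as np

class GaLoreAdam:
    """Adam whose moment estimates live in a rank-r projection of the gradient."""

    def __init__(self, shape, rank=4, lr=1e-3, betas=(0.9, 0.999), eps=1e-8,
                 update_proj_every=200):
        m, n = shape
        self.rank, self.lr, self.eps = rank, lr, eps
        self.b1, self.b2 = betas
        self.every = update_proj_every
        self.P = None
        self.M = np.zeros((rank, n))   # first moment: r x n instead of m x n
        self.V = np.zeros((rank, n))   # second moment: r x n instead of m x n
        self.t = 0

    def step(self, W, G):
        if self.t % self.every == 0:
            # Refresh the subspace from the top-r left singular vectors of G.
            U, _, _ = np.linalg.svd(G, full_matrices=False)
            self.P = U[:, :self.rank]  # m x r projector
        self.t += 1
        R = self.P.T @ G               # projected gradient, r x n
        self.M = self.b1 * self.M + (1 - self.b1) * R
        self.V = self.b2 * self.V + (1 - self.b2) * R**2
        m_hat = self.M / (1 - self.b1**self.t)
        v_hat = self.V / (1 - self.b2**self.t)
        N = m_hat / (np.sqrt(v_hat) + self.eps)
        return W - self.lr * (self.P @ N)   # project the update back to full size

rng = np.random.default_rng(0)
W = rng.normal(size=(64, 32))
opt = GaLoreAdam(W.shape, rank=4)
G = rng.normal(size=W.shape)   # stand-in for a real gradient
W_new = opt.step(W, G)
```

For a 64×32 matrix at rank 4, the two moment buffers hold 256 numbers instead of 4,096.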

Token Merging for Vision Transformers

This one slipped in quietly but turned out to be a solid win for efficiency. Token Merging starts from the observation that many tokens in an image carry nearly the same information, and runs with it: inside the network, it finds pairs of very similar tokens and merges them, so later layers process fewer tokens. It's like cleaning up clutter, so the model only spends effort on what really matters. The surprise? You don’t lose much accuracy, but you save a lot on compute. That’s especially helpful when working with high-res images, where token counts grow quickly and things slow down fast.
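A simplified sketch of the bipartite matching step behind Token Merging, in NumPy. It assumes similarity is measured on the token features themselves (the ToMe paper uses attention keys), merges `r` tokens in one call (in a ViT this would run inside each block), and tracks how many original tokens each merged token represents so averages stay properly weighted. Token order is not preserved.

```python
import numpy as np

def bipartite_merge(x, r, size=None):
    """Merge r tokens of x (n_tokens x dim) into their most similar partners."""
    if size is None:
        size = np.ones(len(x))
    a, b = x[0::2], x[1::2]              # split tokens into two alternating sets
    sa, sb = size[0::2], size[1::2]
    an = a / np.linalg.norm(a, axis=1, keepdims=True)
    bn = b / np.linalg.norm(b, axis=1, keepdims=True)
    sims = an @ bn.T                     # cosine similarity, A x B
    best_b = sims.argmax(axis=1)         # each A token's closest B token
    order = np.argsort(-sims.max(axis=1))
    merged, kept = order[:r], order[r:]  # the r most redundant A tokens get merged
    b_sum = b * sb[:, None]              # size-weighted sums of the B tokens
    b_size = sb.copy()
    # A plain loop handles several A tokens landing on the same B token.
    for i in merged:
        j = best_b[i]
        b_sum[j] += a[i] * sa[i]
        b_size[j] += sa[i]
    out = np.concatenate([a[kept], b_sum / b_size[:, None]])
    out_size = np.concatenate([sa[kept], b_size])
    return out, out_size

rng = np.random.default_rng(0)
tokens = rng.normal(size=(8, 4))
merged_tokens, sizes = bipartite_merge(tokens, r=2)
```

Each call shrinks the sequence by exactly `r` tokens, which is what makes the compute savings predictable layer by layer.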

A Few Final Thoughts

Not every paper on AI needs to change the world. Some of them just need to make it work a little better. What the ICLR 2024 awards highlighted was that a simple shift in how we frame a problem, or a small tweak to a method, often ends up sparking real progress. Whether it's giving models memory, teaching them to think twice, or just helping them run smoother, the papers this year showed that innovation doesn't always have to look flashy. Sometimes, it just looks like things working the way they always should have.